Posts I have commented on:

Increased Challenges in Evaluating Information Online

Julian’s comment drew my attention to advanced technology that can manipulate video and audio. This adds to our current challenge: not only can we not trust everything we read in the news, but audio and video recordings are no longer as reliable either.

The video below shows how these tools can mimic public figures such as Obama, which is alarming if the tools fall into the wrong hands.

Source: YouTube (inspired by Julian)

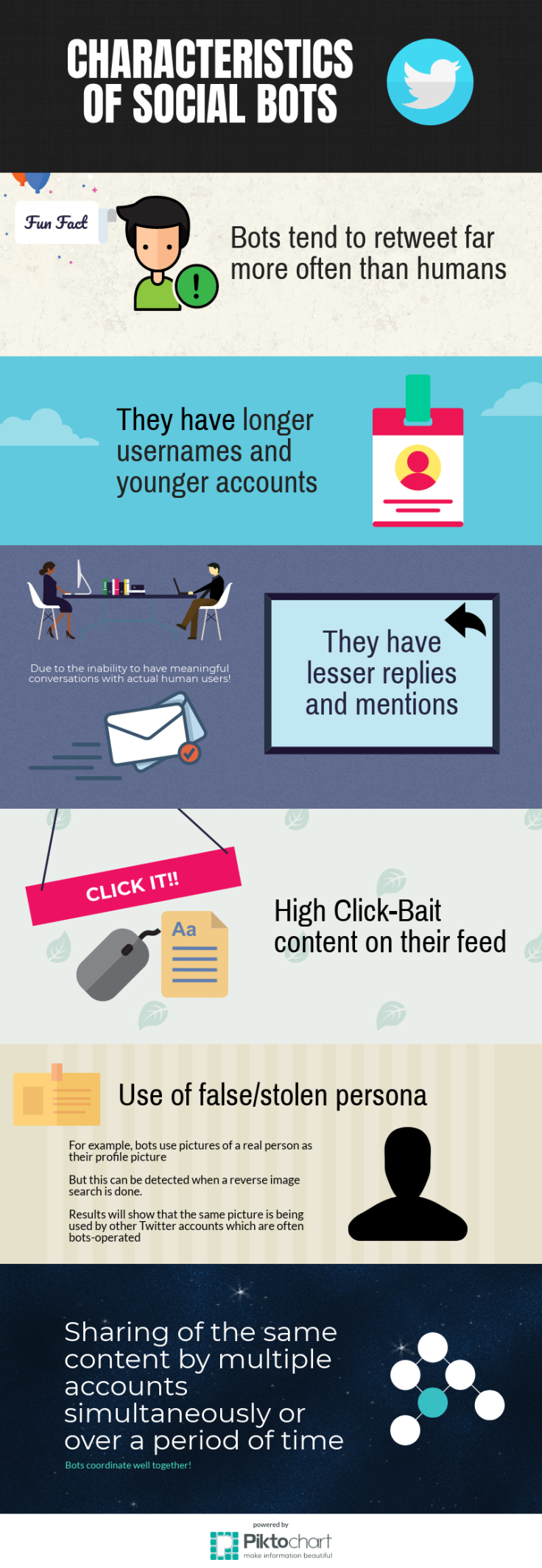

Marianne also mentioned that bots can influence our views because they behave like humans and create believable content to spread rumors. What’s worse? We can’t easily identify them!

Researchers from the University of Southern California estimated that 9% to 15% of active Twitter accounts are bots! When misused, bots can cause serious damage, such as influencing politics.

So, what are some characteristics of these bots?

Source: Self-produced

So, How Can We Better Evaluate Information?

Min Hui’s post explained how social media platforms verify the official profile of a person or brand by awarding its account a blue-checkmark “verified” badge. Because a verified account is confirmed to belong to who it claims to be, the information it shares is more authentic, and this helps us evaluate news better.

For example, Twitter!

Source: Self-produced (with Screenshots from Twitter)

After interacting with Gordon Lockhart on FutureLearn, I agree that we must stay aware that filter bubbles exist so that we can try to change our internet routines. For example, instead of using Google as our primary search engine, we can use alternatives like DuckDuckGo, which does not track our online behaviour! Because its results are not personalised to our browsing history, this helps keep the information fed to us more neutral.

Source: Screenshot from DuckDuckGo

Ying Zhen raised a valid point in his post about how we can better manage our filter bubbles, and I used some of his insights to illustrate how we can achieve this in the picture below.

Source: Self-produced

To Conclude…

Source: Self-produced

Word Count: 313

References:

Solon, O. (2017, July 26). The future of fake news: don’t believe everything you read, see or hear. Retrieved November 17, 2017, from https://www.theguardian.com/technology/2017/jul/26/fake-news-obama-video-trump-face2face-doctored-content

Emerging Technology from the arXiv. (2014, September 19). How to Spot a Social Bot on Twitter. Retrieved November 17, 2017, from https://www.technologyreview.com/s/529461/how-to-spot-a-social-bot-on-twitter/

Newberg, M. (2017, March 10). As many as 48 million Twitter accounts aren’t people, says study. Retrieved November 17, 2017, from https://www.cnbc.com/2017/03/10/nearly-48-million-twitter-accounts-could-be-bots-says-study.html

Shaffer, K. (2017, June 5). Spot a Bot: Identifying Automation and Disinformation on Social Media. Retrieved November 17, 2017, from https://medium.com/data-for-democracy/spot-a-bot-identifying-automation-and-disinformation-on-social-media-2966ad93a203

About verified accounts | Twitter Help Center. (n.d.). Retrieved November 17, 2017, from https://support.twitter.com/articles/119135

Media Literacy – Learning in the Network Age – University of Southampton. (n.d.). Retrieved November 17, 2017, from https://www.futurelearn.com/courses/learning-network-age/3/steps/263021#fl-comments
